Standing Strong: Surviving the AWS Outage

In the early hours of 20 October 2025, a major Amazon Web Services (AWS) outage rippled across the internet. Popular platforms, from WhatsApp, Snapchat and Fortnite to Amazon’s own Alexa and Ring, went dark. For a few tense hours, parts of the digital world simply stopped working.

Incidents like this remind us how deeply our lives and businesses depend on cloud infrastructure. When one of the world’s largest providers stumbles, the effects are felt everywhere.

Yet amid the disruption, we’re happy to say: Our systems stood strong.

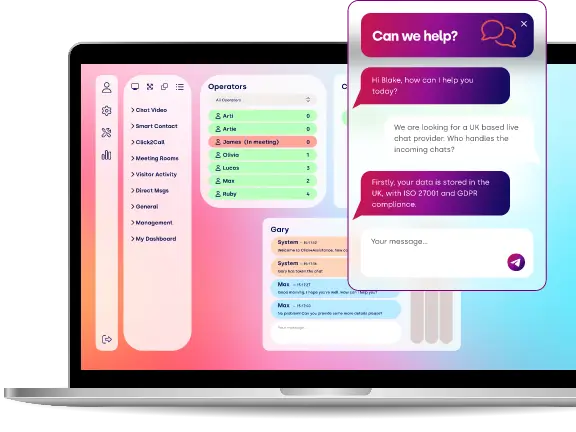

We were not entirely unscathed: social media functionality such as our WhatsApp integration was affected because it depends on a third-party provider. However, Click4Assistance primarily operates outside of AWS (https://www.click4assistance.co.uk/servicestatus) and was able to continue providing our live chat services uninterrupted.

But this is in no way a victory lap; it’s a moment for reflection. Here’s what today’s outage revealed, not just about AWS, but about modern cloud reliance and what it really means to build systems that can stand strong.

What Happened: A Quick Recap

Below is a summary of what is known at the time of writing:

- The first public signs of trouble emerged around 03:11 a.m. Eastern Time (ET), when users and status dashboards reported increased error rates, latencies, and degraded performance in the US-EAST-1 (Northern Virginia) AWS region. (Sources: TechRadar, AP News, Reuters)

- The outage cascaded quickly. Many services that depend on AWS infrastructure, whether for compute, storage, databases, identity, or DNS, started failing entirely or intermittently. (Sources: DataCenterKnowledge, The Verge, AP News)

- AWS engineers traced the root of the issue to DNS resolution problems for the DynamoDB API endpoint in US-EAST-1. (Sources: Tom's Guide, AP News, The Verge)

- As time passed, mitigations were applied. By around 05:27 a.m. ET, AWS reported “significant signs of recovery” and said that most requests were succeeding again. (Sources: DataCenterKnowledge, AP News, Reuters)

- Later updates declared that the underlying issue had been fully mitigated, though residual throttling and backlogs of queued requests were expected. (Sources: Techloy, Newsweek, AP News)

- AWS plans to publish a Post-Event Summary (PES) detailing the scope, root causes, contributing factors, and remediation steps. (Source: Amazon Web Services, Inc.)

The outage spanned roughly 2 to 3 hours from peak disruption to substantial recovery; however, for some services and users, lingering effects may persist beyond that.

Who Was Affected (and How Badly)

Because AWS underpins such a wide portion of the Internet’s infrastructure stack, the fallout was vast and diverse:

- Social, messaging and entertainment: Snapchat, Roblox, Fortnite, Signal, Discord and others. Many users were unable to log in, load content, or interact. (Sources: Cybernews, AP News, The Verge)

- Amazon’s own services: Amazon’s retail site, Alexa smart home devices, Ring, Kindle, and more experienced failures or degraded performance. (Sources: Newsweek, AP News, The Verge)

- Banks, finance and payment services: In the UK, users reported problems with Lloyds, Bank of Scotland and Halifax, as well as with accessing HMRC services. (Sources: Reuters, The Guardian, AP News)

- Enterprise and SaaS applications: Tools like Canva, Airtable, Slack, Duolingo, and more faced outages or degraded behaviour. (Sources: The Guardian, The Verge, AP News)

- Cascading dependencies: Many services rely on global features or shared AWS endpoints (e.g. IAM, global tables, DNS), so disruptions in US-EAST-1 propagated across regions and to seemingly unrelated services. (Sources: AP News, TechRadar, DataCenterKnowledge)
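To see how a fault in one place can reach seemingly unrelated services, it helps to think of an architecture as a dependency graph. The Python sketch below is purely illustrative: the service names and the `DEPENDS_ON` map are hypothetical, not a description of AWS's architecture or our own. It walks the graph backwards from a failed component to list everything that depends on it, directly or indirectly.

```python
from collections import deque

# Hypothetical dependency map: each service lists what it directly depends on.
# These names are illustrative only, not a description of any real system.
DEPENDS_ON: dict[str, list[str]] = {
    "dns": [],
    "dynamodb": ["dns"],
    "iam": ["dynamodb"],
    "login-service": ["iam"],
    "message-store": ["dynamodb"],
    "chat-api": ["login-service", "message-store"],
    "cdn": [],
    "static-site": ["cdn"],
}


def blast_radius(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the graph: for each component, record who depends on it.
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    # Breadth-first search outward from the failed component.
    affected: set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    print(sorted(blast_radius("dns", DEPENDS_ON)))
    # ['chat-api', 'dynamodb', 'iam', 'login-service', 'message-store']
```

With this toy map, a DNS failure reaches the chat API by two routes (login via IAM, and the message store), both of which lead back to DynamoDB and DNS, while the static site, which only depends on the CDN, is untouched. Mapping real dependencies this way, even roughly, is a useful first step towards spotting hidden single points of failure.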

The failure was less about a single service going down and more about domain resolution and routing: the components responsible for directing traffic to the correct services were broken, not necessarily the data itself. Because of that, many systems simply couldn’t ‘find’ their backends, even if the storage or compute behind them was intact.

Why This Matters (and What It Reveals)

1. Cloud Monoculture is Fallible

So much digital infrastructure is provided by a few major cloud providers (Amazon, Microsoft, Google). When one of them fails, a huge swathe of the Internet feels it immediately.

2. DNS is Still Fragile

DNS resolution is at the heart of nearly all web routing. When DNS misbehaves, very little downstream works. This outage underscores just how critical foundational infrastructure services like DNS are.
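One common mitigation is to keep a ‘last known good’ copy of resolved addresses and fall back to it when a lookup fails, the same idea behind the ‘serve-stale’ behaviour that RFC 8767 describes for DNS resolvers. The Python sketch below applies it at application level. The `resolve` function, the in-memory cache and the one-hour staleness limit are illustrative assumptions, and the `lookup` parameter exists only so the fallback can be exercised without a real DNS failure.

```python
import socket
import time
from typing import Callable

# Hypothetical in-memory cache: hostname -> (resolved addresses, when resolved).
_last_known_good: dict[str, tuple[list[str], float]] = {}


def resolve(
    host: str,
    port: int = 443,
    lookup: Callable[..., list] = socket.getaddrinfo,
    max_stale_seconds: float = 3600.0,
) -> list[str]:
    """Resolve `host`, falling back to recently cached addresses if DNS fails."""
    try:
        infos = lookup(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        cached = _last_known_good.get(host)
        if cached is not None and time.monotonic() - cached[1] <= max_stale_seconds:
            # Stale but usable: the backend itself may still be perfectly healthy.
            return cached[0]
        raise  # Nothing recent enough to fall back on.
    # Each getaddrinfo entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address as a string.
    addresses = sorted({info[4][0] for info in infos})
    _last_known_good[host] = (addresses, time.monotonic())
    return addresses
```

Stale addresses are a trade-off: if a provider has genuinely moved an endpoint, the cached address may now be wrong, which is why the sketch caps how old a fallback entry is allowed to be.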

3. Recovery is Complex & Non-Instant

Even after the root cause is mitigated, systems still have to recover: draining queued requests, working through retries and easing throttling all take time.
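Retries are part of why recovery is slow: if every client retries immediately and in lockstep, the backlog surges again the moment a service comes back. A widely used pattern is capped exponential backoff with random ‘full’ jitter. The Python sketch below is a minimal, hypothetical helper (`retry_with_backoff` and its defaults are our own illustrative choices, not any library's API). It only retries the error types listed as retryable, and the injectable `sleep` lets it be tested without actually waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    retryable: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return operation()
        except retryable:
            attempt += 1
            if attempt >= max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # The ceiling doubles each time (0.5s, 1s, 2s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: wait a random time in [0, ceiling] so clients spread out.
            sleep(random.uniform(0, ceiling))
```

Errors outside `retryable` (for example, a validation error) propagate immediately, since retrying them would only add load without any chance of success.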

4. Trust & Reputation Are Fragile

For AWS, trust is everything. Every outage chips away at confidence, especially for mission-critical customers (banks, health systems, government). The post-outage communication, transparency, and remediation matter as much as the fix.

Lessons & Best Practices for the Future

Whether you're an SME or a big enterprise, here's what to take away:

| Recommendation | Why It Helps | How to Do It |
|---|---|---|
| Test disaster recovery often | A plan is only as good as your ability to execute it in real life | Run regular trials and engineer chaos experiments |
| Graceful ‘Out of Service’ modes | Let users do something rather than failing completely | Design software to offer read-only modes or cached content rather than a full outage |
| Keep communication providers separate | Reduces the risk of every communication channel being unavailable at once | Use separate providers for telephone and text-based comms |
| Transparency & communication | Users are less upset by an outage if they know what’s going on | Maintain status pages, push updates and issue postmortems |
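A graceful ‘Out of Service’ mode can be as simple as remembering the last good response and serving it, clearly flagged, when the live backend is unreachable. The Python sketch below is a hypothetical wrapper: `ReadOnlyFallback` and `Result` are illustrative names, and the 15-minute default maximum age is an arbitrary assumption. Callers check `degraded` to disable writes and show an ‘out of service’ notice instead of an error page.

```python
import time
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


@dataclass
class Result(Generic[T]):
    value: T
    degraded: bool  # True when served from cache because the live source failed


class ReadOnlyFallback(Generic[T]):
    """Wrap a live data source; if it fails, serve the last good value in read-only mode."""

    def __init__(
        self,
        fetch: Callable[[], T],
        max_age_seconds: float = 900.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._max_age = max_age_seconds
        self._clock = clock
        self._cached: Optional[T] = None
        self._cached_at: Optional[float] = None

    def get(self) -> Result[T]:
        try:
            value = self._fetch()
        except Exception:
            # Live source unavailable: fall back to a recent cached copy if we have one.
            if self._cached_at is not None and self._clock() - self._cached_at <= self._max_age:
                return Result(self._cached, degraded=True)
            raise  # No usable copy: let the caller show a full outage message.
        # Fresh data: remember it for next time and serve it normally.
        self._cached, self._cached_at = value, self._clock()
        return Result(value, degraded=False)
```

Capping the cache age keeps the fallback honest: after a long outage it is usually better to say so plainly than to keep showing increasingly stale information.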

Final Thoughts

Today’s AWS outage is a lesson in systemic risk, architectural humility, and the fragility of a globally connected digital ecosystem.

As computing becomes more central to every facet of life, our infrastructure choices carry outsized consequences. Outages like this one remind us that no matter how advanced our systems are, the fundamental dependencies (DNS, routing, configuration) currently remain our Achilles’ heels.